It happened again: another cascading failure of technology. Recently we've had internet blackouts, aviation-system debacles, and now a widespread outage resulting from a problem affecting Microsoft systems, which has grounded flights and disrupted a range of other businesses, including health-care providers, banks, and broadcasters.

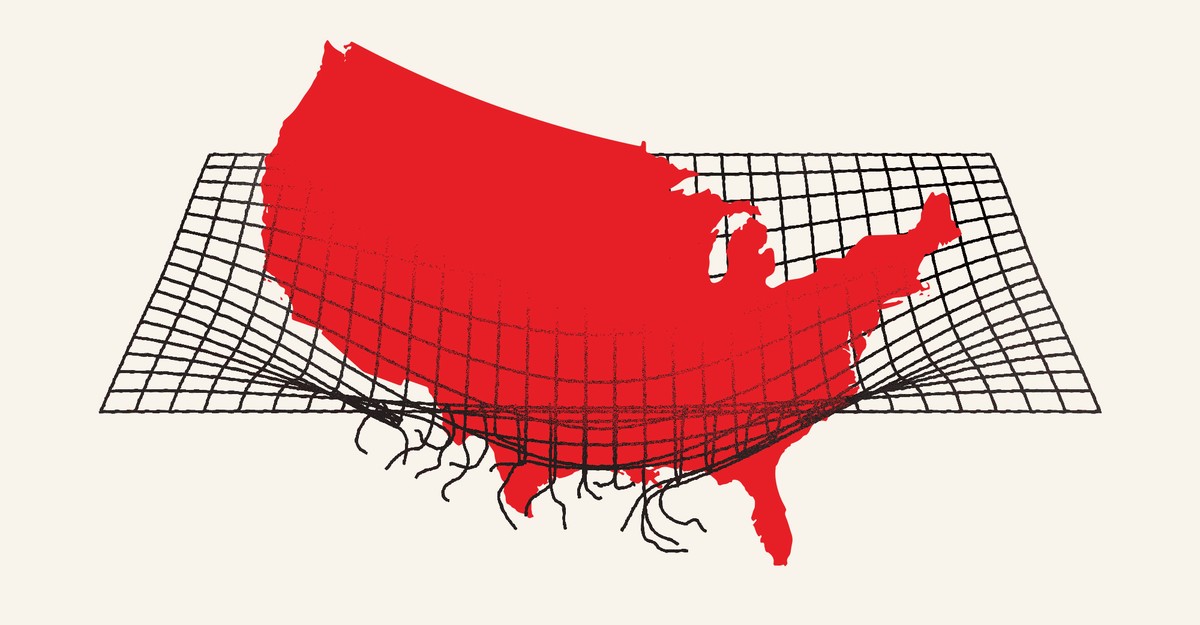

Why are we so bad at preventing these? Fundamentally, because our technological systems are too complicated for anyone to fully understand. These are not computer programs built by a single person; they are the work of many hands over the span of many years. They are the interaction of countless components that may have been designed in a particular way for reasons that no one remembers. Many of our systems involve vast numbers of computers, any one of which could malfunction and bring down all the rest. And many have millions of lines of computer code that no one fully grasps.

We don't appreciate any of this until things go wrong. We discover the fragility of our technological infrastructure only when it's too late.

So how can we make our systems fail less often?

We need to get to know them better. The best way to do that, ironically, is to break them. Much as biologists irradiate bacteria to cause mutations that show us how the bacteria function, we can introduce errors into technologies to understand how they're prone to fail.

This work often falls to software-quality-assurance engineers, who test systems by throwing lots of different inputs at them. A popular programming joke illustrates the basic idea.

A software engineer walks into a bar. He orders a beer. Orders zero beers. Orders 99,999,999,999 beers. Orders a lizard. Orders -1 beers. Orders a ueicbksjdhd. (So far, so good. The bartender may not have been able to procure a lizard, but the bar is still standing.) A real customer walks in and asks where the bathroom is. The bar bursts into flames.
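
The engineer in the joke is doing what testers call boundary-value and invalid-input testing. Here is a minimal sketch of that checklist in Python, assuming a hypothetical `order_beers` function (and a made-up per-order limit, `MAX_BEERS`) that should either accept an order or reject it cleanly; neither comes from any real system.

```python
MAX_BEERS = 20  # hypothetical per-order limit, chosen only for illustration


def order_beers(quantity: object) -> str:
    """Hypothetical bar API: accept a beer order or reject it with ValueError."""
    # Reject non-integers ("a lizard", "ueicbksjdhd"); bool is excluded because
    # Python treats it as a subclass of int.
    if not isinstance(quantity, int) or isinstance(quantity, bool):
        raise ValueError(f"not a quantity: {quantity!r}")
    if quantity < 0:
        raise ValueError("negative orders are not allowed")
    if quantity > MAX_BEERS:
        raise ValueError("order exceeds the per-order limit")
    return f"{quantity} beer(s) coming up"


# The engineer's inputs from the joke: typical, zero, huge, wrong type, negative, garbage.
test_inputs = [1, 0, 99_999_999_999, "a lizard", -1, "ueicbksjdhd"]


def run_checks(inputs):
    """Try every input; a clean rejection counts as 'the bar is still standing'."""
    results = {}
    for value in inputs:
        try:
            results[repr(value)] = order_beers(value)
        except ValueError as err:
            results[repr(value)] = f"rejected: {err}"
    return results


if __name__ == "__main__":
    for value, outcome in run_checks(test_inputs).items():
        print(f"{value:>16} -> {outcome}")
```

Every input the engineer thought of is handled. The punch line is that the request nobody anticipated (asking for the bathroom) never goes through this code path at all, so no amount of checking these cases would have caught it.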

Engineers can induce only so many errors. When something happens that they didn't anticipate, the system breaks down. So how can we expand the range of failures that systems are exposed to? As someone who studies complex systems, I have a few approaches.

One is called "fuzzing." Fuzzing is sort of like that engineer at the bar, but on steroids. It involves feeding huge amounts of randomly generated input into a software program to see how the program responds. If it doesn't fail, then we can be more confident that it will survive the real and unpredictable world. The first Apple Macintosh was made more robust by a similar technique.
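
To make the idea concrete, here is a minimal sketch of plain random (black-box) fuzzing in Python. The target, `parse_greeting`, is a hypothetical function with two planted bugs: it assumes its input is valid UTF-8 and non-empty. The fuzzer simply throws random byte strings at it and records whatever raises an exception. Real-world fuzzers such as AFL and libFuzzer are far more sophisticated, using code-coverage feedback to steer their inputs.

```python
import random


def parse_greeting(data: bytes) -> str:
    """Hypothetical target with latent bugs: assumes valid UTF-8 and non-empty input."""
    text = data.decode("utf-8")        # raises UnicodeDecodeError on invalid bytes
    return text[0].upper() + text[1:]  # raises IndexError on empty input


def fuzz(target, iterations=10_000, max_len=16, seed=0):
    """Feed random byte strings into `target` and collect the inputs that crash it."""
    rng = random.Random(seed)  # fixed seed, so every crash can be reproduced
    crashes = []
    for _ in range(iterations):
        length = rng.randint(0, max_len)
        data = bytes(rng.randrange(256) for _ in range(length))
        try:
            target(data)
        except Exception as err:  # any uncaught exception counts as a failure
            crashes.append((data, type(err).__name__))
    return crashes


if __name__ == "__main__":
    crashes = fuzz(parse_greeting)
    kinds = sorted({kind for _, kind in crashes})
    print(f"{len(crashes)} crashing inputs; failure types: {kinds}")
```

Seeding the random generator is a deliberate choice: a crash found by a fuzzer is only useful if an engineer can replay the exact input that triggered it.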

Fuzzing works at the level of individual programs, but we also need to inject failure at the system level. This has become known as "chaos engineering." As a manifesto on the practice points out, "Even when all of the individual services in a distributed system are functioning properly, the interactions between those services can cause unpredictable outcomes." Combine unpredictable outcomes with disruptive real-world events and you get systems that are "inherently chaotic." Manufacturing that chaos in the engineering phase is crucial for reducing it in the wild.

Netflix was an early practitioner of this. In 2012, it publicly released a software suite it had been using internally, called Chaos Monkey, that randomly took down different subsystems to test how the company's overall infrastructure would respond. This helped Netflix anticipate and guard against systemic failures that fuzzing could not have caught.
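
The core idea can be shown with a toy model. The sketch below is not Netflix's code and does not use Chaos Monkey's interface; it is a hypothetical simulation in which a site depends on several services, each running some number of interchangeable instances. Random instances are killed, and the experiment measures how often the site as a whole keeps working. The service names and replica counts are invented for illustration.

```python
import random


def chaos_round(cluster, rng):
    """Pick one healthy instance at random, anywhere in the cluster, and kill it."""
    healthy = [(svc, i) for svc, nodes in cluster.items()
               for i, up in enumerate(nodes) if up]
    if healthy:
        svc, i = rng.choice(healthy)
        cluster[svc][i] = False


def site_is_up(cluster):
    """The site works only if every service still has at least one live instance."""
    return all(any(nodes) for nodes in cluster.values())


def run_experiment(replicas, rounds, trials=1_000, seed=0):
    """Fraction of trials in which the site survives `rounds` random kills."""
    rng = random.Random(seed)
    survived = 0
    for _ in range(trials):
        cluster = {svc: [True] * n for svc, n in replicas.items()}
        for _ in range(rounds):
            chaos_round(cluster, rng)
        survived += site_is_up(cluster)  # True counts as 1, False as 0
    return survived / trials


if __name__ == "__main__":
    # Hypothetical layouts: "billing" is a single point of failure in the first.
    fragile = {"frontend": 3, "catalog": 3, "billing": 1}
    sturdy = {"frontend": 3, "catalog": 3, "billing": 3}
    print("fragile:", run_experiment(fragile, rounds=2))
    print("sturdy: ", run_experiment(sturdy, rounds=2))
```

With two random kills, the sturdy layout always survives, because no service can lose all three of its instances. The fragile layout goes down whenever the lone billing instance is hit, which happens in roughly 2 of every 7 trials. That is exactly the kind of hidden single point of failure such experiments are meant to expose before a real outage does.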

That being said, fuzzing and chaos engineering aren't perfect. As our technological systems grow more complex, testing every input or scenario becomes impossible. Randomness can help us find additional errors, but we will only ever be sampling a tiny subset of possible situations. And that sampling can easily miss the kinds of failures that distort systems without fully breaking them. These are disturbingly difficult to root out.

Contending with these realities requires some epistemological humility: There are limits to what we can know about how and when our technologies will fail. It also requires us to curb our impulse to blame system-wide failures on a specific person or group. Modern systems are in many cases simply too large to allow us to point to a single actor when something goes wrong.

In their book, Chaos Engineering, Casey Rosenthal and Nora Jones offer a few examples of system failures with no single culprit. One involves a large online retailer that, in an effort to avoid introducing bugs during the holiday season, temporarily stopped making changes to its software code. This meant the company also paused its frequent system resets, which those changes required. Its caution backfired. A minor bug in an external library that the retailer used began to cause memory problems (issues that frequent resetting would have rendered harmless), and outages ensued.
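
Why would pausing resets matter? A small simulation makes the mechanism visible. The sketch below assumes a hypothetical process that leaks a fixed amount of memory every hour, with an invented memory limit and leak rate; none of these numbers come from the retailer's actual incident. Each restart (for example, one triggered by a routine deployment) frees the leaked memory.

```python
def hours_until_out_of_memory(leak_mb_per_hour, limit_mb,
                              restart_every_hours=None, horizon_hours=24 * 30):
    """Return the first hour at which a leaking process exceeds its memory limit,
    or None if it survives the whole horizon. Restarts reset leaked memory to zero."""
    used = 0.0
    for hour in range(1, horizon_hours + 1):
        used += leak_mb_per_hour
        if used > limit_mb:
            return hour
        if restart_every_hours and hour % restart_every_hours == 0:
            used = 0.0  # a deploy restarts the process and frees the leaked memory
    return None


if __name__ == "__main__":
    # Hypothetical numbers: a 50 MB/hour leak against a 2,048 MB limit.
    print("daily restarts:", hours_until_out_of_memory(50, 2048, restart_every_hours=24))
    print("change freeze: ", hours_until_out_of_memory(50, 2048))
```

With daily restarts, the leak never accumulates past 1,200 MB, so the process survives the full 30-day horizon. Freeze the restarts and the same harmless-looking leak crosses the limit after 41 hours. Neither the bug nor the freeze is a failure on its own; the outage comes from their interaction.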

In cases like this one, the fault is less likely to belong to any one engineer than to the inevitable complexity of modern software. Therefore, as Rosenthal and Jones argue (and as I explore in my book Overcomplicated), we must cope with that complexity by using techniques such as chaos engineering instead of trying to engineer it away.

As our world becomes more interconnected through vast systems, we need to be the ones breaking them, over and over again, before the world gets a chance.
