This article was originally published by Quanta Magazine.

A picture may be worth a thousand words, but how many numbers is a word worth? The question may sound silly, but it happens to be the foundation that underlies large language models, or LLMs, and through them, many modern applications of artificial intelligence.

Every LLM has its own answer. In Meta’s open-source Llama 3 model, words are split into tokens represented by 4,096 numbers; for one version of GPT-3, it’s 12,288. Individually, these long numerical lists, known as “embeddings,” are just inscrutable chains of digits. But in concert, they encode mathematical relationships between words that can look surprisingly like meaning.
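To make “a token represented by a list of numbers” concrete, here is a minimal sketch of an embedding table: one row of numbers per token ID. It assumes a hypothetical three-word vocabulary and only 4 numbers per token instead of thousands, and the values are random, standing in for an untrained model before any meaning has been learned.

```python
import random

# Hypothetical toy vocabulary: token string -> integer ID.
vocab = {"dog": 0, "cat": 1, "chair": 2}

# Real models use thousands of numbers per token (4,096 in Llama 3);
# 4 keeps the example readable.
EMBED_DIM = 4

# The embedding table holds one row of numbers per token ID.
# Random values stand in for an untrained model.
rng = random.Random(0)  # fixed seed so the table is reproducible
embedding_table = [
    [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
    for _ in range(len(vocab))
]

def embed(token: str) -> list[float]:
    """Look up the row of numbers (the embedding) for a token."""
    return embedding_table[vocab[token]]

print(embed("dog"))  # four floats: an inscrutable chain of digits on its own
```

Looking up a token is just indexing into a table; whatever meaning the numbers carry comes from how training later adjusts the rows relative to one another.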

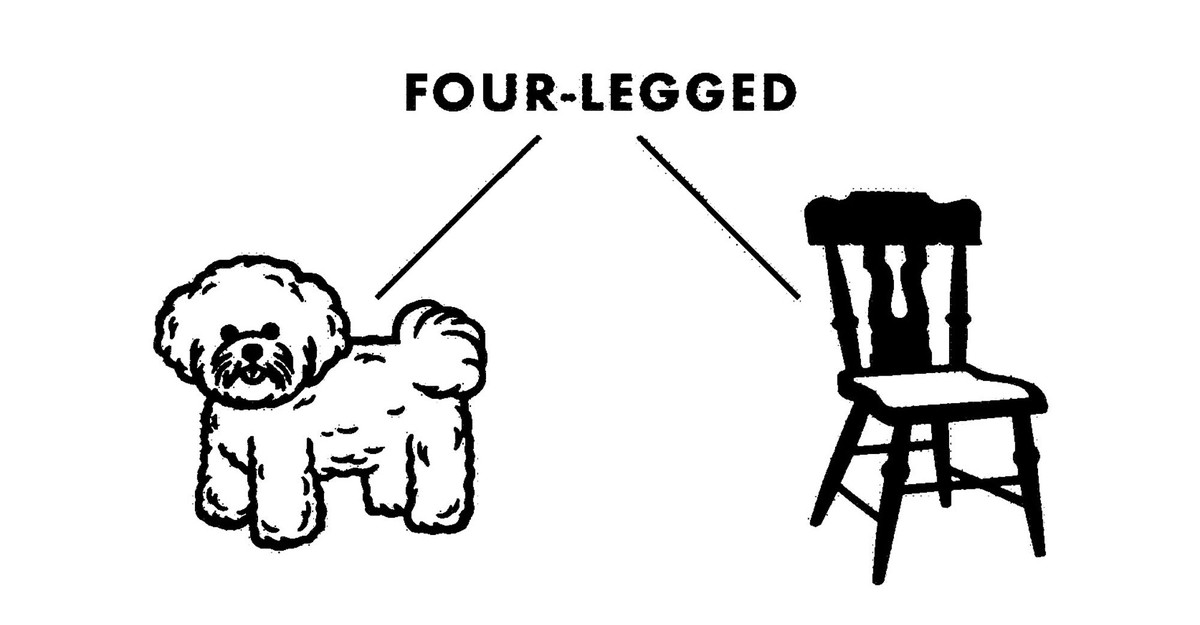

The basic idea behind word embeddings is decades old. To model language on a computer, start by taking every word in the dictionary and making a list of its essential features (how many is up to you, as long as it’s the same for every word). “You can almost think of it like a 20 Questions game,” says Ellie Pavlick, a computer scientist studying language models at Brown University and Google DeepMind. “Animal, vegetable, object: the features can be anything that people think are useful for distinguishing concepts.” Then assign a numerical value to each feature in the list. The word dog, for example, would score high on “furry” but low on “metallic.” The result will embed each word’s semantic associations, and its relationship to other words, into a unique string of numbers.
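The hand-built, 20 Questions style of embedding described above can be sketched as a small table of feature scores. The feature names and the 0-to-1 scores below are hypothetical choices for illustration, not taken from any real lexicon.

```python
# Hypothetical hand-picked features, in the spirit of 20 Questions.
FEATURES = ["animal", "furry", "metallic", "furniture"]

# Scores from 0.0 (not at all) to 1.0 (very much); illustrative guesses only.
hand_embeddings = {
    "dog":   [1.0, 0.9, 0.0, 0.0],
    "cat":   [1.0, 0.8, 0.0, 0.0],
    "chair": [0.0, 0.1, 0.3, 1.0],
}

# Every word gets the same number of features, in the same order.
assert all(len(v) == len(FEATURES) for v in hand_embeddings.values())

for word, vector in hand_embeddings.items():
    described = ", ".join(f"{name}={score}" for name, score in zip(FEATURES, vector))
    print(f"{word}: {described}")
```

Because each position has a human-chosen label, every number here is interpretable, which is exactly what is lost once the features are learned automatically.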

Researchers once specified these embeddings by hand, but now they’re generated automatically. For instance, neural networks can be trained to group words (or, technically, fragments of text called “tokens”) according to features that the network defines on its own. “Maybe one feature separates nouns and verbs really nicely, and another separates words that tend to occur after a period from words that don’t occur after a period,” Pavlick says.

The downside of these machine-learned embeddings is that, unlike in a game of 20 Questions, many of the descriptions encoded in each list of numbers are not interpretable by humans. “It seems to be a grab bag of stuff,” Pavlick says. “The neural network can just make up features in any way that will help.”

But when a neural network is trained on a particular task called language modeling, which here involves predicting the next word in a sequence, the embeddings it learns are anything but arbitrary. Like iron filings lining up under a magnetic field, the values become set in such a way that words with similar associations have mathematically similar embeddings. For example, the embeddings for dog and cat will be more similar than those for dog and chair.
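“Mathematically similar” is often measured with cosine similarity, which compares the directions of two vectors; that choice of metric is a common convention and an assumption here, since the article doesn’t name one. The vectors below are hypothetical hand-picked stand-ins for learned embeddings.

```python
import math

# Hypothetical 4-number vectors standing in for learned embeddings.
emb = {
    "dog":   [1.0, 0.9, 0.0, 0.0],
    "cat":   [1.0, 0.8, 0.0, 0.0],
    "chair": [0.0, 0.1, 0.3, 1.0],
}

def cosine_similarity(u, v):
    """Near 1.0 means pointing the same way; 0.0 means orthogonal (unrelated)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print("dog/cat:  ", round(cosine_similarity(emb["dog"], emb["cat"]), 3))
print("dog/chair:", round(cosine_similarity(emb["dog"], emb["chair"]), 3))
```

With these made-up numbers, dog and cat score close to 1 while dog and chair score near 0, mirroring the pattern the article describes for trained embeddings.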

This phenomenon can make embeddings seem mysterious, even magical: a neural network somehow transmuting raw numbers into linguistic meaning, “like spinning straw into gold,” Pavlick says. Famous examples of “word arithmetic” (king minus man plus woman roughly equals queen) have only enhanced the aura around embeddings. They seem to act as a rich, flexible repository of what an LLM “knows.”
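The “word arithmetic” example can be reproduced in miniature: subtract and add vectors, then look for the nearest remaining word. This is a toy sketch with hypothetical 3-number vectors whose axes (royalty, gender, fruitiness) were chosen by hand so the analogy works; in trained models the result only holds roughly, as the article says. Excluding the three input words from the answer is a common convention in analogy tests.

```python
import math

# Hypothetical 3-D vectors with hand-chosen axes: (royalty, gender, fruitiness).
# Positive gender leans "male", negative leans "female"; values are illustrative.
vectors = {
    "king":   [0.95, 0.90, 0.0],
    "queen":  [0.95, -0.85, 0.0],
    "man":    [0.10, 0.90, 0.0],
    "woman":  [0.10, -0.90, 0.0],
    "prince": [0.80, 0.90, 0.0],
    "apple":  [0.0, 0.0, 1.0],
}

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    options = (w for w in vectors if w not in {a, b, c})
    return max(options, key=lambda w: cosine(vectors[w], target))

print(analogy("king", "man", "woman"))  # queen
```

Subtracting man and adding woman flips the gender coordinate while leaving royalty intact, so the nearest remaining point is queen.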

But this supposed knowledge isn’t anything like what we’d find in a dictionary. Instead, it’s more like a map. If you imagine every embedding as a set of coordinates on a high-dimensional map shared by other embeddings, you’ll see certain patterns pop up. Certain words will cluster together, like suburbs hugging a big city. And again, dog and cat will have more similar coordinates than dog and chair.

But unlike points on a map, these coordinates refer only to one another. They don’t point to any underlying territory, the way latitude and longitude numbers indicate specific spots on Earth. Instead, the embeddings for dog or cat are more like coordinates in interstellar space: meaningless, except for how close they happen to be to other known points.
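One way to see that only relative positions matter, at least in a toy setting: rotate every embedding by the same angle. Every individual coordinate changes, yet every similarity between words stays the same, so nothing that depends only on how close points are to one another can tell the two versions apart. The 2-D vectors and the 137-degree angle below are arbitrary, hypothetical choices.

```python
import math

# Hypothetical 2-D embeddings (real ones have thousands of dimensions).
points = {"dog": (1.0, 0.9), "cat": (1.0, 0.8), "chair": (-0.2, 1.0)}

def rotate(v, theta):
    """Rotate a 2-D vector counterclockwise by theta radians."""
    x, y = v
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

# Apply the same rotation to every point on the "map".
rotated = {w: rotate(v, math.radians(137)) for w, v in points.items()}

for a, b in [("dog", "cat"), ("dog", "chair")]:
    before = cosine(points[a], points[b])
    after = cosine(rotated[a], rotated[b])
    print(f"{a}/{b}: {points[a]} -> {rotated[a]}, similarity {before:.4f} -> {after:.4f}")
```

Like coordinates in interstellar space, the absolute numbers shift, but the relationships between the points are untouched.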

So why are the embeddings for dog and cat so similar? It’s because they take advantage of something that linguists have known for decades: Words used in similar contexts tend to have similar meanings. In the sequence “I hired a pet sitter to feed my ____,” the next word might be dog or cat, but it’s probably not chair. You don’t need a dictionary to determine this, just statistics.
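The “just statistics” point can be illustrated with a classic count-based technique: represent each word by counts of the words that appear near it, then compare those count vectors. This is a swapped-in, older method, not how LLMs learn their embeddings, and the six-sentence corpus and window size of 2 are made up for illustration.

```python
import math
from collections import Counter

# A tiny made-up corpus; real models see vastly more text.
corpus = [
    "i fed my dog this morning",
    "i fed my cat this morning",
    "the pet sitter walked my dog",
    "the pet sitter brushed my cat",
    "i sat on my chair",
    "the chair has four legs",
]

WINDOW = 2  # how many words on each side count as "context"

def context_counts(sentences, window=WINDOW):
    """Map each word to a Counter of the words within `window` positions of it."""
    counts = {}
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            ctx = counts.setdefault(word, Counter())
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    ctx[words[j]] += 1
    return counts

def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v)

vectors = context_counts(corpus)
print("dog/cat:  ", round(cosine(vectors["dog"], vectors["cat"]), 3))    # 0.875
print("dog/chair:", round(cosine(vectors["dog"], vectors["chair"]), 3))  # 0.316
```

No definitions are involved: dog and cat end up close only because they keep showing up next to the same words (fed, my, this, morning).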

Embeddings (contextual coordinates based on those statistics) are how an LLM can find a good starting point for making its next-word predictions, without relying on definitions.
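A heavily simplified sketch of how embeddings can feed a next-word prediction: score each candidate word by the dot product between its vector and a vector summarizing the context, then turn the scores into probabilities with a softmax. The candidate vectors and the context vector for “I hired a pet sitter to feed my ____” are hypothetical; in a real LLM the context vector would be computed by the network’s layers, not written by hand.

```python
import math

# Hypothetical vectors for three candidate next words.
candidates = {
    "dog":   [1.0, 0.9, 0.0, 0.0],
    "cat":   [1.0, 0.8, 0.0, 0.0],
    "chair": [0.0, 0.1, 0.3, 1.0],
}

# Hypothetical summary vector for "I hired a pet sitter to feed my ____".
context = [2.0, 1.5, -1.0, -1.0]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - m) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

# Score each candidate by its dot product with the context vector.
scores = {w: sum(c * x for c, x in zip(context, vec)) for w, vec in candidates.items()}
probs = softmax(scores)

for word, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{word:6s} {p:.3f}")
```

Here dog and cat split most of the probability and chair gets very little, purely because of where their vectors sit relative to the context vector.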

Certain words in certain contexts fit together better than others, sometimes so precisely that literally no other words will do. (Imagine finishing the sentence “The current president of France is named ____.”) According to many linguists, a big part of why humans can finely discern this sense of fit is that we don’t just relate words to one another; we actually know what they refer to, like territory on a map. Language models don’t, because embeddings don’t work that way.

Still, as a proxy for semantic meaning, embeddings have proved surprisingly effective. It’s one reason why large language models have rapidly risen to the forefront of AI. When these mathematical objects fit together in a way that coincides with our expectations, it feels like intelligence; when they don’t, we call it a “hallucination.” To the LLM, though, there’s no difference. They’re just lists of numbers, lost in space.
